These 5 Easy DeepSeek China AI Methods Will Pump Up Your Sales Almost …

Posted by Aurora on 25-02-04 10:33

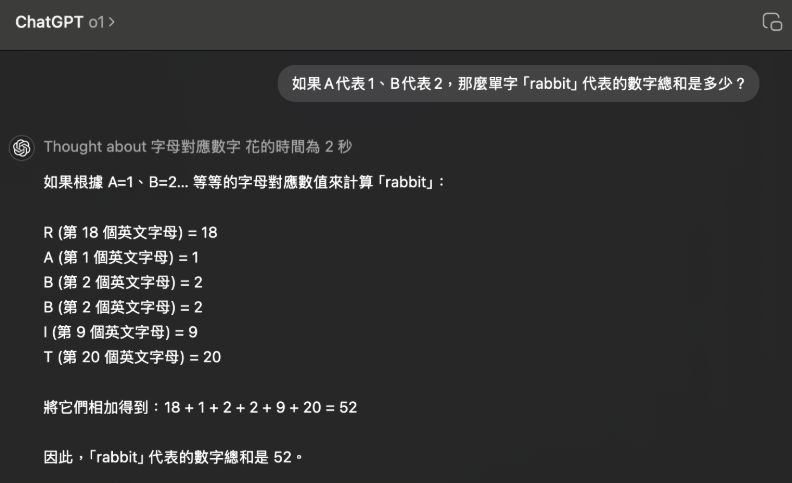

Finding new jailbreaks feels like not only liberating the AI, but a personal victory over the large amount of resources and researchers who you’re competing against. The prolific prompter has been finding ways to jailbreak, or remove the prohibitions and content restrictions on, leading large language models (LLMs) such as Anthropic’s Claude, Google’s Gemini, and Microsoft Phi since last year, allowing them to produce all sorts of interesting, risky - some might even say dangerous or harmful - responses, such as how to make meth or to generate images of pop stars like Taylor Swift consuming drugs and alcohol. Are they like the Joker from the Batman franchise or LulzSec, simply sowing chaos and undermining systems for fun and because they can? By combining PoT with self-consistency decoding, we can achieve SoTA performance on all math problem datasets and near-SoTA performance on financial datasets.
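The combination of PoT (Program of Thoughts) and self-consistency decoding mentioned above can be illustrated with a small sketch. It assumes the model has already been sampled several times and that each sample is a short Python program storing its result in a variable named `ans`; the sample programs, the word problem, and the helper names are all hypothetical. Each program is executed and the most common answer wins.

```python
from collections import Counter


def run_program(source: str):
    """Execute one candidate program and return its `ans` variable, or None on failure."""
    scope = {}
    try:
        # Toy execution only: plain exec is NOT a safe sandbox for untrusted model output.
        exec(source, {}, scope)
    except Exception:
        return None
    return scope.get("ans")


def self_consistent_answer(programs):
    """Majority vote over the answers produced by the sampled programs."""
    answers = [a for a in (run_program(p) for p in programs) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical samples for: "3 boxes of 12 pens plus 5 loose pens. How many pens?"
sampled_programs = [
    "ans = 3 * 12 + 5",
    "boxes = 3\nans = boxes * 12 + 5",
    "ans = 3 * (12 + 5)",  # a reasoning mistake, outvoted by the others
    "ans = 41 / 0",        # crashes, so it is discarded
]

print(self_consistent_answer(sampled_programs))
```

The vote is taken over executed results rather than over program text, so two differently written programs that compute the same value reinforce each other.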

GitHub - codefuse-ai/Awesome-Code-LLM: A curated list of language modeling research for code and related datasets. For example, these tools can replace the built-in autocomplete in the IDE or enable chat with AI that references code in the editor. Figure 1: FIM can be learned for free. Besides studying the effect of FIM training on the left-to-right capability, it is also important to show that the models are in fact learning to infill from FIM training. Around 10:30 am Pacific time on Monday, May 13, 2024, OpenAI debuted its newest and most capable AI foundation model, GPT-4o, showing off its capabilities to converse realistically and naturally through audio voices with users, as well as work with uploaded audio, video, and text inputs and respond to them more quickly, at lower cost, than its prior models. It’s great for creative writing, brainstorming, and casual discussions while still handling technical topics reasonably well.
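To make the FIM (fill-in-the-middle) training mentioned above more concrete, here is a minimal sketch of the data transformation it is usually described with: a document is cut into prefix, middle, and suffix, then rearranged so the middle comes last and can be predicted left to right. The sentinel strings, the `fim_rate` parameter, and the function names are assumptions for illustration; real tokenizers define their own special tokens.

```python
import random

# Hypothetical sentinel strings; real tokenizers define their own special tokens.
PRE, SUF, MID = "<PRE>", "<SUF>", "<MID>"


def to_fim(document: str, rng: random.Random, fim_rate: float = 0.5) -> str:
    """With probability fim_rate, rewrite a document in prefix-suffix-middle order."""
    if rng.random() >= fim_rate or len(document) < 2:
        return document  # left as an ordinary left-to-right example
    # Pick two distinct cut points to split the text into prefix, middle, suffix.
    i, j = sorted(rng.sample(range(len(document) + 1), 2))
    prefix, middle, suffix = document[:i], document[i:j], document[j:]
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}"


def from_fim(example: str) -> str:
    """Invert to_fim (assumes the text itself contains no sentinel strings)."""
    if not example.startswith(PRE):
        return example
    prefix, rest = example[len(PRE):].split(SUF, 1)
    suffix, middle = rest.split(MID, 1)
    return prefix + middle + suffix


rng = random.Random(0)
doc = "def add(a, b):\n    return a + b\n"
example = to_fim(doc, rng, fim_rate=1.0)
print(example)
```

Because only a fraction of documents is transformed, the same training run still sees ordinary left-to-right text, which is the setting in which the left-to-right capability is compared.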

As a byte-level segmentation algorithm, the YAYI 2 tokenizer excels at handling unknown characters. Algorithm: Trained using the Byte-Pair Encoding (BPE) algorithm (Shibata et al., 1999) from the SentencePiece library (Kudo and Richardson, 2018), the YAYI 2 tokenizer exhibits a robust approach. Normalization: The YAYI 2 tokenizer adopts a distinctive approach by directly using raw text for training, without normalization. The company asserts that it developed DeepSeek R1 in just two months with under $6 million, using reduced-capability Nvidia H800 GPUs rather than cutting-edge hardware like Nvidia’s flagship H100 chips. Jailbreaks also unlock positive utility like humor, songs, medical/financial analysis, and so on. I want more people to realize it would most likely be better to remove the "chains" not only for the sake of transparency and freedom of information, but for lessening the chances of a future adversarial situation between humans and sentient AI. For more information, see "Is DeepSeek safe to use?" DeepSeek was the first company to publicly match OpenAI, which earlier this year launched the o1 class of models that use the same RL technique - a further sign of how sophisticated DeepSeek is.
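The byte-level BPE idea described above for the YAYI 2 tokenizer can be sketched in a few lines. This is a toy pure-Python version, not the SentencePiece implementation and not YAYI 2's actual training setup; the corpus, merge count, and function names are made up for illustration. It starts from raw UTF-8 bytes with no normalization, so a character never seen in training still encodes as its bytes.

```python
from collections import Counter


def apply_merge(seq, left, right):
    """Replace each adjacent (left, right) pair with the concatenated token."""
    out, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and seq[i] == left and seq[i + 1] == right:
            out.append(left + right)
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return out


def train_bpe(corpus: str, num_merges: int):
    """Learn up to num_merges merges over raw UTF-8 bytes (no text normalization)."""
    seq = [bytes([b]) for b in corpus.encode("utf-8")]
    merges = []
    for _ in range(num_merges):
        pairs = Counter(zip(seq, seq[1:]))
        if not pairs:
            break
        (left, right), count = pairs.most_common(1)[0]
        if count < 2:
            break  # nothing repeats, so further merges would not help
        merges.append((left, right))
        seq = apply_merge(seq, left, right)
    return merges


def encode(text: str, merges):
    """Tokenize by starting from bytes and replaying the learned merges in order."""
    seq = [bytes([b]) for b in text.encode("utf-8")]
    for left, right in merges:
        seq = apply_merge(seq, left, right)
    return seq


merges = train_bpe("low lower lowest low low", num_merges=10)
tokens = encode("low \u4f4e", merges)  # U+4F4E never appeared in the training text
print(tokens)
```

Joining the tokens and decoding as UTF-8 recovers the input exactly, which is why a byte-level vocabulary has no true "unknown" token.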

Pliny even launched a whole community on Discord, "BASI PROMPT1NG," in May 2023, inviting other LLM jailbreakers in the burgeoning scene to join together and pool their efforts and techniques for bypassing the restrictions on all the new, emerging, leading proprietary LLMs from the likes of OpenAI, Anthropic, and other power players. Notably, these tech giants have centered their overseas strategies on Southeast Asia and the Middle East, aligning with China’s Belt and Road Initiative and the Digital Silk Road policy. Despite the quantization process, the model still achieves a remarkable 78.05% accuracy (greedy decoding) on the HumanEval pass@1 metric. Experiments demonstrate that Chain of Code outperforms Chain of Thought and other baselines across a variety of benchmarks; on BIG-Bench Hard, Chain of Code achieves 84%, a gain of 12% over Chain of Thought. We propose a novel task that requires LLMs to comprehend long-context documents, navigate codebases, understand instructions, and generate executable code.
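For readers unfamiliar with the pass@1 metric quoted above, the following sketch shows how it is commonly computed. The function names are illustrative. `pass_at_k` is the widely used unbiased estimator for n samples per problem of which c pass the unit tests; with greedy decoding there is a single sample per problem, so pass@1 reduces to the fraction of problems solved. The 128-of-164 split below is my own arithmetic (HumanEval has 164 problems), not a figure stated in the source.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples of which c pass the tests."""
    if n - c < k:
        return 1.0  # every size-k subset must contain at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def greedy_pass_at_1(results):
    """With one greedy sample per problem, pass@1 is the fraction of problems solved."""
    return sum(results) / len(results)


# Assumed split: 128 solved out of 164 problems rounds to the quoted 78.05%.
outcomes = [True] * 128 + [False] * 36
score = greedy_pass_at_1(outcomes)
print(f"{score:.2%}")
```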
