The Talk Over DeepSeek and ChatGPT

Gladys Polson, posted 25-02-16 08:52

MINT-1T. MINT-1T, a vast open-source multimodal dataset, has been released with one trillion text tokens and 3.4 billion images, incorporating diverse content from HTML, PDFs, and ArXiv papers. DeepSeek-V3 was trained on 14.8 trillion tokens over approximately two months, using 2.788 million H800 GPU hours, at a cost of about $5.6 million. LARP is a novel video tokenizer designed to improve video generation in autoregressive (AR) models by prioritizing global visual features over individual patch-based details. Open-source replication of crosscoder on Gemma 2B. Anthropic recently published two studies showcasing its novel interpretability method. It was previously believed that novel view synthesis depended heavily on strong 3D inductive biases. Efforts are ongoing to mitigate these biases and ensure fair and unbiased interactions. MeshRet has developed an innovative method for improving motion retargeting for 3D characters, prioritizing the preservation of body geometry interactions from the outset. OpenWebVoyager provides tools, datasets, and models designed to build multimodal web agents that can navigate and learn from real-world web interactions. The MINT-1T dataset, roughly ten times larger than previous collections, is meant to accelerate advances in large-scale multimodal machine learning research. Learning to Handle Complex Constraints for Vehicle Routing Problems. Emphasizing a tailored learning experience, the article underscores the importance of foundational skills in math, programming, and deep learning.
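The training figures quoted above (14.8 trillion tokens, 2.788 million H800 GPU hours, about $5.6 million, roughly two months) can be sanity-checked with simple arithmetic. The sketch below is only a back-of-the-envelope check: the 60-day wall-clock duration is an assumption standing in for "approximately two months", and the function names are illustrative.

```python
# Figures as quoted in the text above.
GPU_HOURS = 2.788e6        # H800 GPU hours
TOTAL_COST_USD = 5.6e6     # approximate training cost
TRAINING_TOKENS = 14.8e12  # training tokens

# Assumption: "approximately two months" is taken as 60 days of wall-clock time.
ASSUMED_DAYS = 60


def implied_rate_per_gpu_hour(total_cost, gpu_hours):
    """Cost per GPU hour implied by the quoted total cost."""
    return total_cost / gpu_hours


def tokens_per_gpu_hour(tokens, gpu_hours):
    """Average number of training tokens processed per GPU hour."""
    return tokens / gpu_hours


def implied_gpu_count(gpu_hours, days):
    """Number of GPUs that would need to run continuously for `days` days."""
    return gpu_hours / (days * 24)


rate = implied_rate_per_gpu_hour(TOTAL_COST_USD, GPU_HOURS)
throughput = tokens_per_gpu_hour(TRAINING_TOKENS, GPU_HOURS)
gpus = implied_gpu_count(GPU_HOURS, ASSUMED_DAYS)

print(f"Implied rate: ${rate:.2f} per GPU hour")
print(f"Tokens per GPU hour: {throughput:,.0f}")
print(f"Implied GPU count over {ASSUMED_DAYS} days: {gpus:,.0f}")
```

Under these assumptions the quoted cost works out to roughly two dollars per GPU hour, and the implied cluster size is a little under two thousand GPUs.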

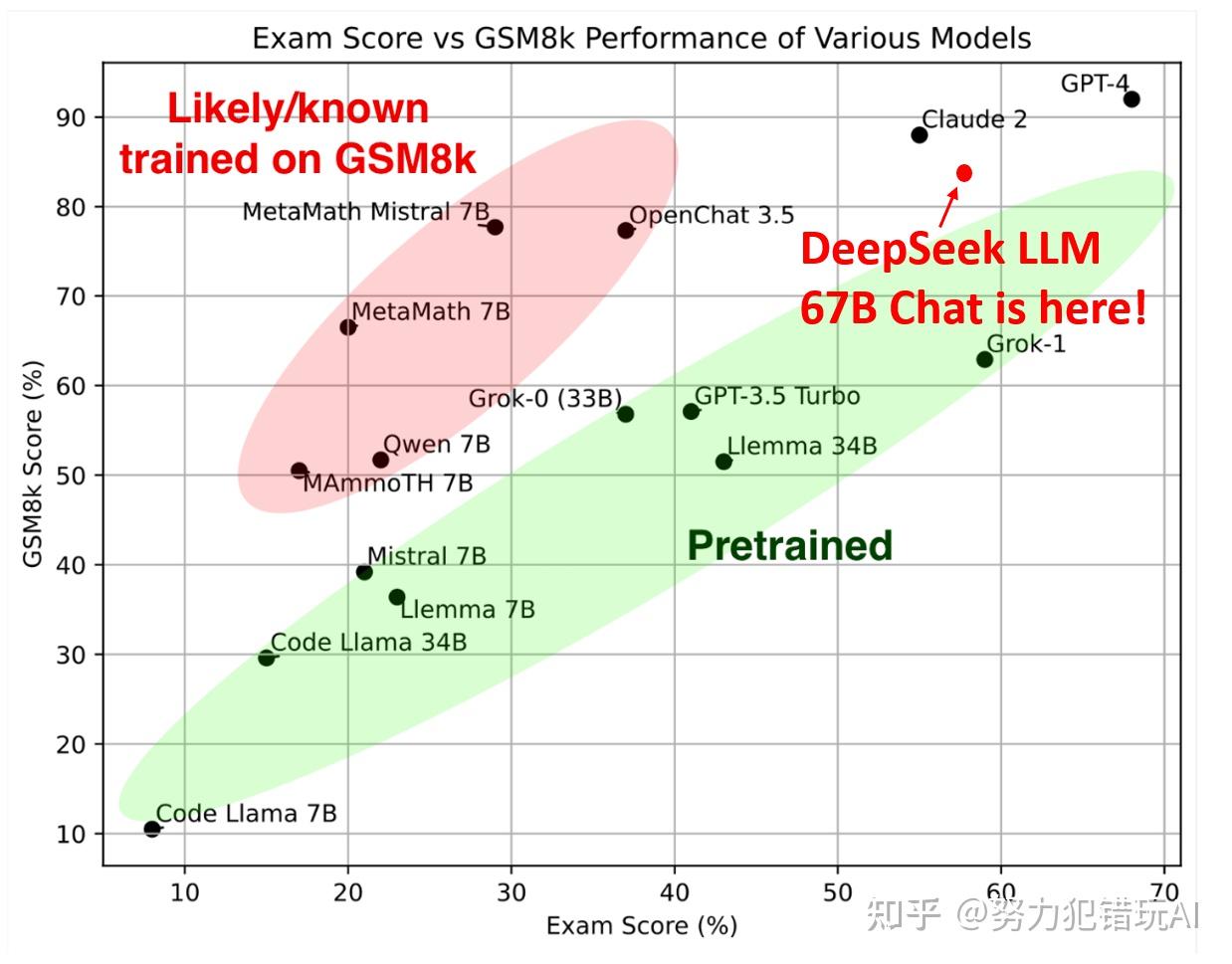

The model's performance on these benchmarks underscores its ability to handle a wide range of tasks, from high school-level problems to professional-level challenges. Quantization is a technique that reduces a model's size by lowering the precision of its parameters. Later, on November 29, 2023, DeepSeek released DeepSeek LLM, described as the "next frontier of open-source LLMs," scaled up to 67B parameters. Despite the hit taken to Nvidia's market value, the DeepSeek models were trained on around 2,000 Nvidia H800 GPUs, according to a research paper released by the company. Decisions made this year will shape the trajectories of frontier AI during a period of potentially extraordinary progress, one that brings with it enormous upside possibilities as well as potentially grave dangers. Though still relatively new, Google believes this framework will play a vital role in helping improve AI transparency. ThunderKittens. ThunderKittens is a framework designed for creating highly efficient GPU kernels.
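To make the quantization remark above concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is an illustration of the general idea only, not the scheme used by any particular model or library; the function names and the sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integer codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # One float scale is stored for the whole tensor; guard against all zeros.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored as a one-byte code plus a single shared scale, instead of a four-byte float, which is where the size reduction comes from; the cost is the small rounding error measured by `max_error`.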

Researchers have developed a Proactive Infeasibility Prevention (PIP) framework designed to improve neural network performance on Vehicle Routing Problems (VRPs) with complex constraints. [...] and advanced methods like Mixture of Experts. Just today we finalized a rule related to components, key components of vehicles from the PRC or from Russia, and then full-up vehicles that contain those components. RATD operates in two steps: first, it retrieves relevant historical data from a database, and then it uses this data as a reference to guide the denoising phase. Meta has published a quick start guide to help users build a simplified version of Google's popular NotebookLM system. NotebookLlama: An Open Source version of NotebookLM. Open the LM models search engine by clicking this search icon from the top left pane. This post offers an open replication of the crosscoder on the Gemma 2B model. CompassJudger-1 is the first open-source, comprehensive judge model created to improve the evaluation process for large language models (LLMs).
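The two-step pattern described for RATD above (retrieve similar history, then use it as a reference during denoising) can be illustrated with a toy sketch. This is not RATD's actual implementation: the nearest-neighbour retrieval, the averaging of references, and the simple blending step that stands in for a learned denoiser are all assumptions made for illustration, as are the function names and data.

```python
import math


def retrieve_references(query, database, k=2):
    """Step 1: return the continuations of the k history windows closest to the query."""
    def distance(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    ranked = sorted(database, key=lambda item: distance(query, item["history"]))
    return [item["future"] for item in ranked[:k]]


def average(series_list):
    """Combine several retrieved continuations into one reference series."""
    return [sum(values) / len(values) for values in zip(*series_list)]


def guided_denoise_step(noisy, reference, weight=0.5):
    """Step 2 (toy stand-in for a learned denoiser): pull the sample toward the reference."""
    return [(1 - weight) * n + weight * r for n, r in zip(noisy, reference)]


# Hypothetical database of past windows and what followed them.
database = [
    {"history": [1.0, 2.0, 3.0], "future": [4.0, 5.0]},
    {"history": [1.0, 2.0, 2.9], "future": [3.8, 4.9]},
    {"history": [9.0, 8.0, 7.0], "future": [6.0, 5.0]},
]

query = [1.0, 2.1, 3.0]
reference = average(retrieve_references(query, database, k=2))
noisy_forecast = [0.0, 10.0]
refined = guided_denoise_step(noisy_forecast, reference)
print(reference, refined)
```

In a real diffusion model the second step would be a trained network conditioned on the reference and applied over many noise levels; the blend here only shows where the retrieved data enters the process.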
